How do you know when you've come across a good process model? Do you rely on gut feel, or do you actually assess quantitatively how good a process model is? Interestingly enough, there's very little out there that describes how to assess whether a process model is good or not.

Assessing process models is an important part of process model governance, and you want to govern your process models so that you consistently create valuable, useful models. One step in assessing process models is being able to measure quantitatively how good they are. This is especially useful when you have tens, hundreds, or even thousands of process models awaiting your approval.

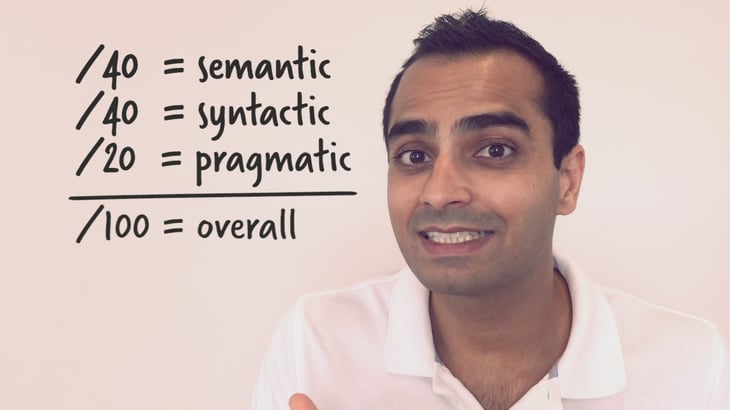

The model quality framework assesses model quality from a quantitative perspective. To break it down further, the assessment covers three categories:

- Semantic quality: the accuracy and completeness of your process models.

- Syntactic quality: the conformance of your process models to your modeling standards.

- Pragmatic quality: the usefulness and fitness for purpose of your process models.

For each of these three categories, you need variables or criteria that let you assess quantitatively how good the process model is, and you assign each category its own maximum score. Semantic, syntactic, and pragmatic quality each contribute a subtotal to the overall score.

For semantic quality, you can assess completeness from the total number of objects associated with each activity. Completeness is easy to calculate and turn into a score. For the purposes of this article, let's give semantic quality 40 points.
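As a rough illustration, one way to turn completeness into a number is to count how many activities carry every expected associated object and scale that share onto the 40-point maximum. This is a minimal sketch under stated assumptions: the function name `semantic_score`, the expected object types, and the proportional scaling are hypothetical choices, not part of the framework itself.

```python
from typing import Mapping, Set

SEMANTIC_MAX = 40  # points allocated to semantic quality in this article's example


def semantic_score(activities: Mapping[str, Set[str]], expected: Set[str]) -> float:
    """Hypothetical completeness score.

    `activities` maps each activity name to the object types attached to it.
    An activity counts as complete when it has every expected object type;
    the share of complete activities is scaled onto SEMANTIC_MAX.
    """
    if not activities:
        return 0.0
    complete = sum(1 for objs in activities.values() if expected <= objs)
    return SEMANTIC_MAX * complete / len(activities)


# Hypothetical model: "Check credit" is missing its "system" object.
model = {
    "Receive order": {"input", "role", "system"},
    "Check credit": {"input", "role"},
    "Ship goods": {"input", "role", "system"},
    "Send invoice": {"input", "role", "system"},
}
print(semantic_score(model, {"input", "role", "system"}))  # 3 of 4 complete -> 30.0
```

Proportional scaling keeps the score easy to explain to modelers; a weighted scheme (some objects mattering more than others) would be an equally valid assumption.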

For syntactic quality, you look at conformance to your modeling standards: every breach of those standards in the process model deducts points from the score. Let's assign 40 points to syntactic quality.
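The deduction approach can be sketched as starting from the 40-point maximum and subtracting a penalty for each breach found, never going below zero. The rule names and penalty values in `PENALTIES` are hypothetical placeholders; your own modeling standards would define the real list and weights.

```python
from typing import Iterable, Mapping

SYNTACTIC_MAX = 40  # points allocated to syntactic quality in this example

# Hypothetical penalty table: points deducted per breach of each modeling standard.
PENALTIES: Mapping[str, int] = {
    "unlabelled_activity": 2,
    "missing_start_or_end_event": 5,
    "naming_convention": 1,
}


def syntactic_score(breaches: Iterable[str], penalties: Mapping[str, int] = PENALTIES) -> int:
    """Start at the maximum and deduct points for every standards breach found.

    An unknown breach name raises KeyError, so every rule must have a penalty.
    """
    deducted = sum(penalties[b] for b in breaches)
    return max(0, SYNTACTIC_MAX - deducted)


found = ["naming_convention", "naming_convention", "unlabelled_activity"]
print(syntactic_score(found))  # 40 - (1 + 1 + 2) = 36
```

Flooring at zero stops a badly broken model from dragging the other categories down with a negative subtotal.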

Finally, pragmatic quality. You will probably want to assess pragmatic quality with somebody else, ideally someone who actually consumes the process model. Their feedback gives you a quantitative assessment of how good the process model is from a pragmatic perspective. For this example, let's assign 20 points.
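Consumer feedback could be converted into points in many ways. A minimal sketch, assuming consumers rate usefulness on a hypothetical 1 to 5 scale, averages the ratings and scales the average onto the 20-point maximum.

```python
from typing import Sequence

PRAGMATIC_MAX = 20
RATING_SCALE = 5  # hypothetical: consumers rate usefulness from 1 to 5


def pragmatic_score(ratings: Sequence[int]) -> float:
    """Average the consumers' ratings and scale them onto PRAGMATIC_MAX."""
    if not ratings:
        raise ValueError("pragmatic quality needs at least one consumer rating")
    if any(not 1 <= r <= RATING_SCALE for r in ratings):
        raise ValueError(f"ratings must be between 1 and {RATING_SCALE}")
    average = sum(ratings) / len(ratings)
    return PRAGMATIC_MAX * average / RATING_SCALE


print(pragmatic_score([4, 5, 3, 4]))  # average 4.0 -> 16.0
```

Requiring at least one rating reflects the point above: pragmatic quality depends on someone who actually uses the model, so an empty feedback list is treated as an error rather than a score.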

In total, you have 40 points for semantic, 40 points for syntactic, and 20 points for pragmatic quality, a total of 100 points. Out of these 100 points, you need to set a minimum threshold. Let's say 80 points.
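The point allocation above can be captured in a small record that checks each subtotal against its cap before summing. The class name `Assessment` and the example scores are hypothetical; the caps (40/40/20) and the 100-point total come from the article's example.

```python
from dataclasses import dataclass

# Maximum points per category, as allocated in this article's example.
MAX_POINTS = {"semantic": 40, "syntactic": 40, "pragmatic": 20}


@dataclass
class Assessment:
    semantic: float
    syntactic: float
    pragmatic: float

    def total(self) -> float:
        """Validate each subtotal against its cap, then return the overall score."""
        for name, cap in MAX_POINTS.items():
            value = getattr(self, name)
            if not 0 <= value <= cap:
                raise ValueError(f"{name} score {value} is outside 0..{cap}")
        return self.semantic + self.syntactic + self.pragmatic


assert sum(MAX_POINTS.values()) == 100  # the three caps add up to 100 points
print(Assessment(semantic=30, syntactic=36, pragmatic=16).total())  # 82
```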

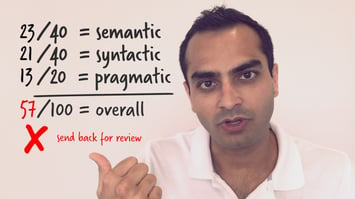

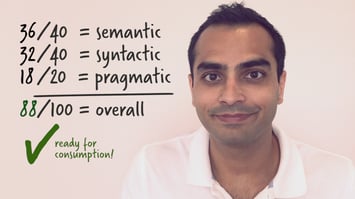

Once you have assessed a model against semantic, syntactic, and pragmatic quality, you can assign it a score.

Any process model scoring below 80 points has to go back for review and correction. Any model scoring 80 points or more is ready for consumption.
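When many models are awaiting approval, the threshold check becomes a simple triage step. This sketch assumes each model already has a total score out of 100 and treats a score exactly equal to the 80-point minimum as passing; the function name `triage` and the model names are hypothetical.

```python
from typing import Dict, List, Tuple

THRESHOLD = 80  # minimum total score (out of 100) for approval in this example


def triage(scores: Dict[str, float], threshold: float = THRESHOLD) -> Tuple[List[str], List[str]]:
    """Split models into (ready for consumption, sent back for review)."""
    approved = sorted(name for name, s in scores.items() if s >= threshold)
    returned = sorted(name for name, s in scores.items() if s < threshold)
    return approved, returned


scores = {"Order to cash": 82, "Procure to pay": 74.5, "Hire to retire": 80}
ready, back = triage(scores)
print(ready)  # ['Hire to retire', 'Order to cash']
print(back)   # ['Procure to pay']
```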

So there is a way to measure quantitatively how good process models are: the model quality framework. You do this across three categories (semantic, syntactic, and pragmatic) and assign values against each one of those areas.

Any model that passes your minimum threshold is good to go. Anything that doesn't, send it right back for review.