The Compelling Origins of Containers

A number of years ago, it became clear that the rise of container platforms was more than just a passing technology trend. A genuine technology shift was taking place that would undoubtedly change the IT industry forever.

Early indicators were everywhere. Investment by the largest software industry giants was significant, as was their marketing positioning. Pivotal and IBM were aggressive with Cloud Foundry and Bluemix. Red Hat was progressive with OpenShift and was receiving industry praise. Docker Inc. stormed onto the scene. Then Google started the Kubernetes open source project, with immediate impact.

Locally, there were also strong indicators. Meetup groups were started and quickly became well attended, especially the Docker meetups based in Australia. In the early days, meetings sold out and many eager attendees were unable to secure a spot.

After a humble start, the Kubernetes Meetup became very popular and continues to be so to this day. There was something brewing, and everyone wanted to be a part of it.

The Compelling Case for Containers vs. Virtual Machines

From a technology perspective, Linux containers and Docker were the first pieces to fall into place. Just as virtual machines had revolutionized the server market, containers made a compelling case to do the same. Without the weight of a guest operating system, and with a straightforward build process, Docker was easy to use and quick to deploy.

Figure 1: Virtual Machines vs. Containers

Kubernetes was equally compelling. By providing container orchestration, including automated deployment, scaling, scheduling, and application management, Kubernetes was the special ingredient that brought it all together. It would help avoid issues such as VM sprawl and low resource utilization that have long plagued operations.

A Clear Direction Forward in a Containerised World

We at Leonardo identified this technology shift early and took immediate action to upskill our team to participate in this new containerised world.

Red Hat was proactive in working with partners, offering a comprehensive training program for OpenShift Container Platform.

From these beginnings, the benefits of container platforms were realised immediately. Leonardo quickly deployed OpenShift Container Platform clusters and began developing applications on the platform. It wasn’t long before Leonardo’s software development lifecycle showed significant improvement and the promise of continuous integration and continuous delivery was being realised.

Leonardo’s first opportunity to deliver a customer project on OpenShift quickly followed these early enablement activities. Emboldened by our early success on the platform, the customer was enthusiastic to replicate it and endorsed our proposed container platform approach. These were exciting times. Leonardo was irreversibly committed to using containers and Kubernetes-based platforms.

Getting hands-on with OpenShift Container Platform

Deploying OpenShift Container Platform was initially straightforward. Basing the original deployments on Red Hat’s reference architecture and using Red Hat’s deployment scripts and playbooks was turnkey for these early requirements. Leonardo used these assets to great effect, both internally and alongside our inaugural customers, to deploy OpenShift Container Platform clusters.

As our needs and our customers’ needs evolved, so too did our dependence on these reference architecture assets. With a constant stream of new releases, these assets started to break, fall behind, and in some cases, hold us back. It quickly became apparent that we needed our own solution. Speed of deployment was becoming a key factor in our customer engagements, and it weighed heavily in the decision to build our own assets to deploy clusters.

Having decided to build our own assets, we agreed on the following objectives:

- Cluster deployment must be efficient, reliable, and repeatable

- A simple workflow was essential

- Ansible automation under source code management was non-negotiable

- Variable-driven configuration was needed for flexibility and customization

- Comprehensive documentation was essential (without rewriting product documentation)

- Less experienced staff must be able to execute the workflow

- All assets must be transferable to customers

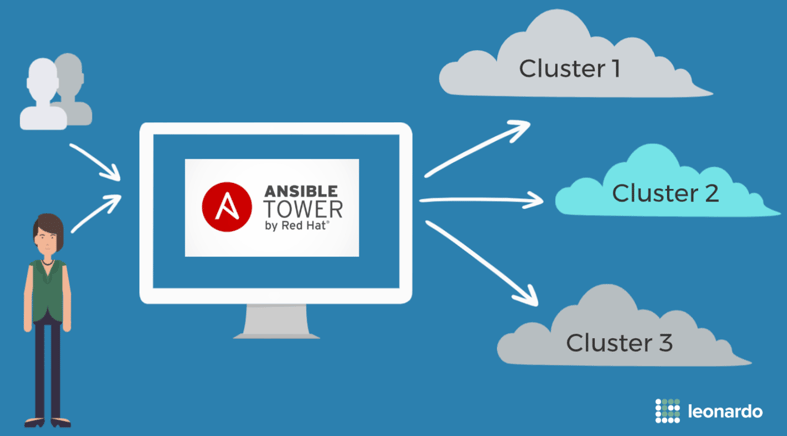

With these objectives in mind, Ansible Tower was chosen as the centralized automation engine.

Why we chose Ansible Tower to automate deployment

Ansible Tower provides a central system that allows automation playbooks to be reused across different inventory files with reliability and repeatability. Auditability and role-based access control are valuable capabilities that come in addition to the core automation engine.

Ansible is widely used across the open source industry for automation, configuration management, cloud provisioning, and application deployment. With its easy-to-read YAML playbook syntax and agentless SSH architecture, it was the perfect solution for us.

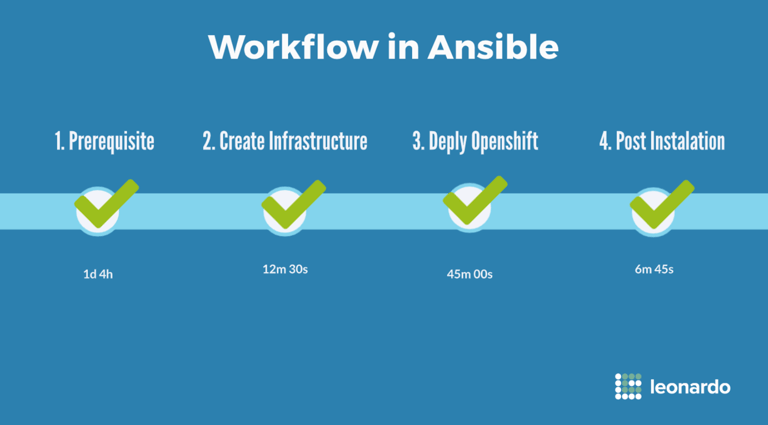

A simple workflow with few steps was intentionally developed in Ansible. Simple steps were essential to ensure that the process was easy to follow at all skill levels and that results were consistent.

First, prerequisite information and credentials are gathered to prepare for the deployment. The more information that is provided, the more closely the deployment is customized to customer requirements. Intelligent defaults are used where customization is not required.
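As a minimal sketch of the variable-driven approach, assuming deployment settings are held as simple key/value pairs, the Python below overlays customer-supplied values on a set of defaults and fails early if a prerequisite is missing. The variable names (`master_count`, `base_domain`, `cloud_credentials`, and so on) and the function `build_deployment_vars` are hypothetical and are not taken from Leonardo's playbooks.

```python
from typing import Any

# Hypothetical defaults: real variable names depend on the playbooks in use.
DEFAULT_VARS: dict[str, Any] = {
    "cluster_name": "ocp-cluster",
    "master_count": 3,
    "infra_count": 2,
    "compute_count": 3,
    "storage_size_gb": 100,
}

# Hypothetical prerequisites that have no sensible default.
REQUIRED_KEYS = ("cloud_credentials", "base_domain")


def build_deployment_vars(customer_vars: dict[str, Any]) -> dict[str, Any]:
    """Overlay customer-supplied values on the defaults after checking prerequisites."""
    missing = [key for key in REQUIRED_KEYS if key not in customer_vars]
    if missing:
        raise ValueError(f"missing prerequisite values: {', '.join(missing)}")
    # In a ** merge the later dict wins, so customer values override defaults.
    return {**DEFAULT_VARS, **customer_vars}


if __name__ == "__main__":
    settings = build_deployment_vars(
        {"cloud_credentials": "cred-ref-placeholder", "base_domain": "example.com", "compute_count": 5}
    )
    print(settings)
```

Ansible itself produces the same effect through variable precedence, where role defaults are overridden by inventory or extra variables; the sketch simply makes that layering explicit.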

Next, the infrastructure is created based on the information provided. This includes the virtual machines, networking, storage, and security. The default architecture provides a highly available cluster with multiple masters and compute nodes.

Once the infrastructure is provisioned, OpenShift is deployed onto it. The transition between these steps is seamless because the infrastructure step builds the inventory required to install OpenShift.
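The hand-off from infrastructure to installation can be illustrated with a short, hypothetical Python sketch that renders an INI-style Ansible inventory from the provisioned hosts. The group layout (`OSEv3`, `masters`, `etcd`, `nodes`) and the `openshift_node_group_name` values follow openshift-ansible 3.10/3.11 conventions as we understand them; treat them as assumptions and check them against the documentation for the OpenShift release being installed. The `Host` type and `render_inventory` function are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Host:
    """A provisioned VM as reported by the (hypothetical) infrastructure step."""
    fqdn: str
    role: str  # one of "master", "infra", "compute"


# Node group names modelled on openshift-ansible 3.10/3.11 conventions (assumption).
NODE_GROUPS = {
    "master": "node-config-master",
    "infra": "node-config-infra",
    "compute": "node-config-compute",
}


def render_inventory(hosts: list[Host], cluster_vars: dict[str, str]) -> str:
    """Render an INI-style Ansible inventory from the provisioned hosts."""
    masters = [h.fqdn for h in hosts if h.role == "master"]
    lines = ["[OSEv3:children]", "masters", "nodes", "etcd", ""]
    lines.append("[OSEv3:vars]")
    lines += [f"{key}={value}" for key, value in sorted(cluster_vars.items())]
    # In this sketch, etcd is co-located on the master hosts.
    lines += ["", "[masters]", *masters, "", "[etcd]", *masters, "", "[nodes]"]
    for h in hosts:
        # Every host, masters included, is listed as a node with its node group.
        lines.append(f"{h.fqdn} openshift_node_group_name='{NODE_GROUPS[h.role]}'")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    provisioned = [
        Host("master1.example.com", "master"),
        Host("infra1.example.com", "infra"),
        Host("node1.example.com", "compute"),
    ]
    print(render_inventory(provisioned, {"openshift_deployment_type": "openshift-enterprise"}))
```

Because the inventory is generated from what was actually provisioned, no one has to transcribe host names by hand between the two steps.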

Lastly, the post-installation tasks are executed. This stage is very important in preparing the OpenShift environment to be developer and production ready. Our Base Platform Stack includes the developer tools and operational applications required on day 1, as well as the configuration, security, and non-functional capabilities for day 2 operations.

Addressing the lack of production readiness with OpenShift clusters

One thing that has been evident at many organisations is the lack of production readiness of their deployed OpenShift clusters. Organisations that have deployed OpenShift often have a cluster that lacks the configuration and the developer and operations tooling required for long-term application development and secure operations. Too often, clusters are vanilla: simple, out-of-the-box default setups that offer no production readiness.

How we made OpenShift Container Platform Production Ready

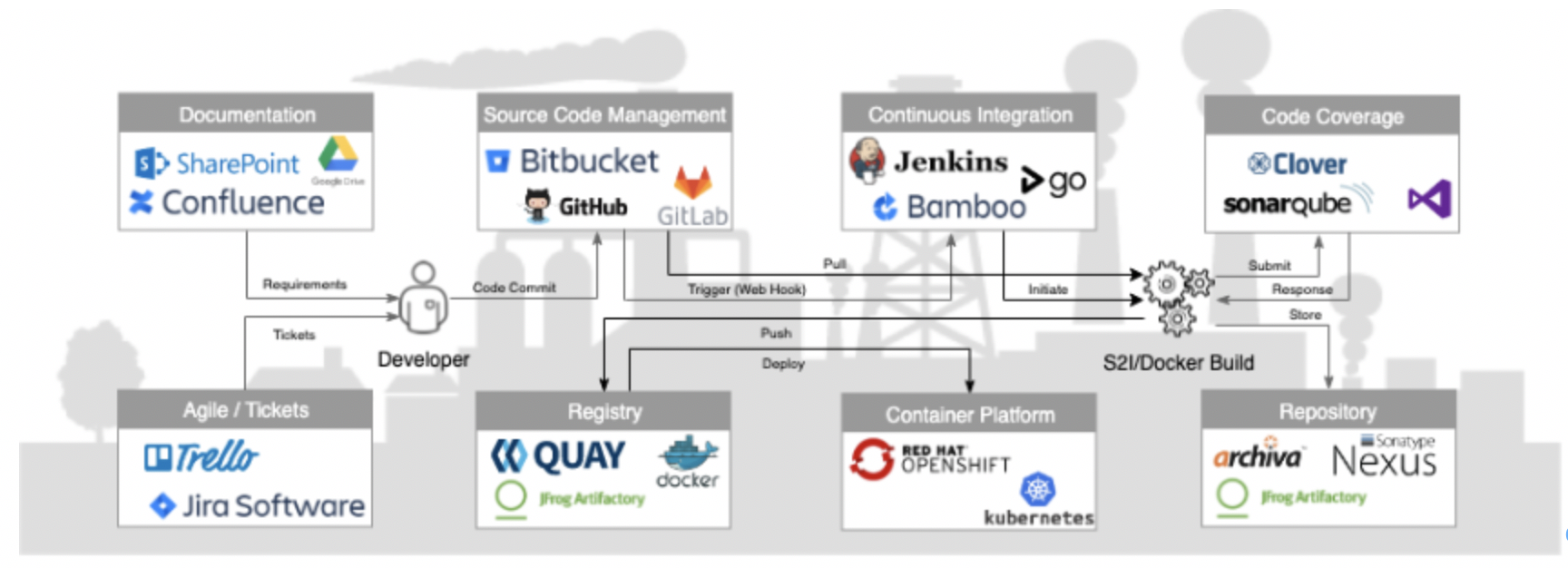

To be production ready, developer tooling not only needs to be deployed, it needs to be stitched together to form a cohesive developer experience (what we call an effective software factory). To achieve the goal of getting a software factory running, developer tooling should be installed, configured, tested, and delivered to the development teams as part of the platform build. This would include:

- Continuous integration, such as Jenkins, Bamboo, GitLab, TeamCity, or Spinnaker

- Artifact repository, such as Nexus, Artifactory, or Apache Archiva

- Source code management, such as GitLab, Bitbucket, or Gogs

- Build tools, such as Buildah, Maven, Gradle, or OpenShift Source-to-Image (S2I)

- IDE support, such as VS Code, Eclipse, Visual Studio, or IntelliJ

- Automated testing tools, such as Selenium or Citrus

- Code coverage and quality, such as SonarQube or Atlassian Clover

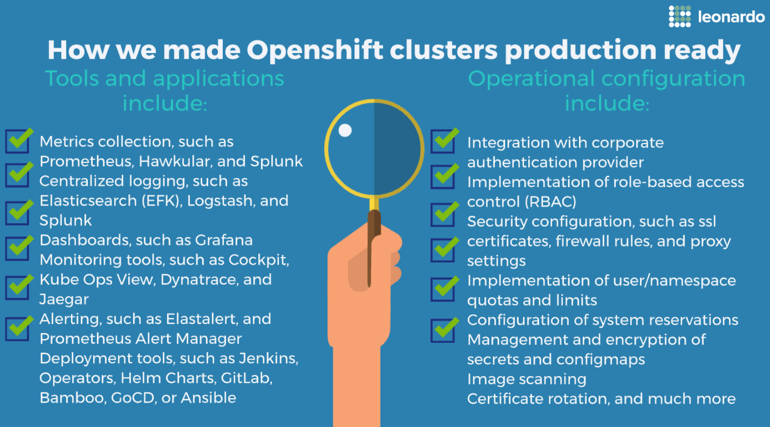

On the operational side of the DevOps equation, there are tools/applications, processes, and configuration required for production readiness.

Tools and applications would include:

- Metrics collection, such as Prometheus, Hawkular, and Splunk

- Centralized logging, such as Elasticsearch (EFK), Logstash, and Splunk

- Dashboards, such as Grafana

- Monitoring tools, such as Cockpit, Kube Ops View, Dynatrace, and Jaeger

- Alerting, such as ElastAlert and Prometheus Alertmanager

- Deployment tools, such as Jenkins, Operators, Helm Charts, GitLab, Bamboo, GoCD, or Ansible

![Metrics Dashboard [Grafana] & Centralised Logging [Kibana]](https://blog.leonardo.com.au/hs-fs/hubfs/image-8.png?width=800&name=image-8.png)

Operational configuration would include:

- Integration with corporate authentication provider

- Implementation of role-based access control (RBAC)

- Security configuration, such as SSL certificates, firewall rules, and proxy settings

- Implementation of user/namespace quotas and limits

- Configuration of system reservations

- Management and encryption of secrets and configmaps

- Image scanning

- Certificate rotation, and much more
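Quotas and limits are a good example of operational configuration that can be generated rather than hand-written. The sketch below, assuming a per-namespace policy with illustrative CPU, memory, and pod values, builds a Kubernetes `ResourceQuota` and `LimitRange` as Python dictionaries and prints them as a JSON `List`, which `oc apply -f` accepts as readily as YAML. The function name `namespace_guardrails`, the object names, and all default values are hypothetical.

```python
import json


def namespace_guardrails(namespace: str, cpu: str, memory: str, pods: int) -> list[dict]:
    """Build a ResourceQuota and a LimitRange for one namespace (illustrative values)."""
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "limits.cpu": cpu,
                "limits.memory": memory,
                "pods": str(pods),
            }
        },
    }
    limits = {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "container-defaults", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    # Applied to containers that do not declare their own requests/limits.
                    "defaultRequest": {"cpu": "100m", "memory": "256Mi"},
                    "default": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        },
    }
    return [quota, limits]


if __name__ == "__main__":
    # A v1 List wraps both objects so a single file can be applied in one step.
    doc = {"apiVersion": "v1", "kind": "List", "items": namespace_guardrails("team-a", "4", "8Gi", 20)}
    print(json.dumps(doc, indent=2))
```

Keeping the values as parameters lets the same function serve every team's namespace, with only the numbers varying.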

The landscape of technology choices is expanding rapidly and continues to move at pace. Understanding these capabilities requires a significant investment of time, yet they must be delivered within the SLAs, RTOs, and RPOs imposed by stakeholders.

The chasm between deployment and adoption of container platforms

Deploying a container platform and adopting it are two different challenges. Even with early success and evidence of the platform’s benefits, there is still significant change management and a learning curve to conquer. To successfully transform existing development practices, skill sets, workflows, and developer habits, patience and persistence are required.

Leonardo was in a fortunate position. As a smaller emerging company, we were able to navigate this journey through proactive training, smart recruitment, peer mentoring, and management support for this strategic direction.

This is not the case for many organisations. Leonardo has first-hand experience of watching customer OpenShift Container Platform implementations left to languish because development and operations teams fail to adopt them into their business-as-usual (BAU) processes.

Initially, this was very surprising. Excitement about, and expectations of, a new container platform are typically very high, especially when project plans are in place, training courses are scheduled, and management is on board. Developers anticipate significant things.

10 common adoption problems with container platforms

Having seen too often that little or nothing happens, we went searching for answers. There are many reasons for adoption problems, including:

- Developers have to support old systems before they can ‘play’ on the new platform

- Operations are not sure if the platform is ‘production ready’

- Not everyone has been trained or is ready to use the new platform

- Uncertainty about how to do things on the platform (i.e., how it maps to the old way)

- Developer tools are not yet integrated (e.g., SCM, CI/CD, build)

- Habits of building locally rather than on the platform

- Backups have not been implemented, tested, or trusted

- The platform or a key component is already versions behind

- There are no examples to follow to lead the way (e.g., templates, pipelines)

- Fear of change by some development and operations personnel

There are many more reasons, but the list above illustrates the range of issues that organisations face when adopting a container platform. This is an all-too-common ‘adoption chasm’.

Fixing these adoption problems

Having successfully guided other customers onto container platforms, we have found several clues as to why some organisations are more successful than others at crossing this adoption chasm.

- Create trust in the platform and a willingness to take on some risk. Because applications are built and deployed differently on a container platform, organisations and individuals must trust that the platform will run their workloads as securely and reliably as the old infrastructure, typically virtual machines, did. Risk is often perceived because familiar assurances, such as VM backups and DR processes, must be let go in order to move forward.

- Encourage enthusiasm and create an atmosphere of experimentation. Successful adopters have an enthusiasm for, and a thirst to try, new things. Many individuals find walking a path for the first time less reassuring than following a well-worn process, because they find comfort in process and consensus. This unease begins with small things, such as no longer needing to request a new environment before deploying an application.

- Build a culture that is adaptable, agile, and change-friendly. Organisations with strong processes, deep organisational structures, heavy governance, and slow decision-making are more likely to have a difficult time making the transition. This is because container platforms free individuals to innovate and reward organisations with flat structures and quick decisions.

The most successful way to cross this ‘adoption chasm’ without forcing change on an organisation’s personnel, appetite for risk, or culture is to engage a trusted partner: one with the experience to help with both technology and personnel.

On the technology side, an experienced partner can help prepare the platform to be production ready by integrating the developer and operational tools and applications into a cohesive environment, and by configuring the platform for continuous secure operations in alignment with the organisation’s desired outcomes and technology preferences.

More importantly, a trusted partner will focus on engaging the personnel side of the equation.

The partner should be prepared to mentor and guide individuals along their learning curve. This would include building example templates, pipelines, deployments, automation scripts, build artefacts, and the like, so that individuals have something they can reference, duplicate, and modify.

Furthermore, a trusted partner would show how to accomplish tasks, answer questions, explain concepts, share information, and document these assets for later reference. As the old saying goes, ‘Give a man a fish and you feed him for a day; teach a man to fish and you feed him for a lifetime’.

Conclusion

Existing technologies and practices have been learnt over a long period, and this cumulative knowledge and experience is embedded in individuals. Much of this know-how is still relevant in the container platform environment. There is, however, an undeniable change that requires some upskilling and, in some cases, unlearning. All of this is necessary to adapt to the new world of developing applications and running them in containers on Kubernetes-based infrastructure.

Organisations are advised to invest in their personnel to realise the significant benefits that await those who successfully transition.